CNP RL

Revision as of 20:07, 1 June 2011

Basic Task Description

The CNP "RL" task contains two tasks embedded in one: a probabilistic selection task (PST) and a probabilistic reversal learning task (PRLT). Both involve reinforcement learning; the PST was designed to assess feedback sensitivity and the PRLT to assess behavioral flexibility. Both have been used extensively to characterize reinforcement learning biases and behavioral flexibility in healthy and patient populations. Initially designed by Michael Frank, the PST is used to determine participants' tendencies to learn from positive or from negative feedback (e.g., Frank et al., 2004). The PRLT, originally developed by Trevor Robbins and Robert Rogers (Lawrence et al., 1999; Swainson et al., 2000), examines participants' ability to adapt to changes in learned contingencies. Both tasks include initial training periods in which participants must learn appropriate responses from probabilistic ("noisy") feedback.

Probabilistic Selection Task

In the PST, three pairs of cards are presented and participants must learn the "correct" card in each pair. Each pair has its own feedback probabilities. For pair AB, choosing A is associated with positive feedback 80% of the time (B 20% of the time). For CD, choosing C leads to positive feedback 70% of the time, and for EF, choosing E leads to positive feedback 60% of the time. Over time, participants learn to choose the higher-probability cards, choosing A, C, and E most of the time. Learning may be achieved either by choosing the card associated with positive feedback or by avoiding the card associated with negative feedback. After training, to assess whether participants learn more from positive or negative feedback, the cards are recombined in a "probe" phase such that each card is paired with every other card. Participants are required to choose between the cards in these novel pairs without receiving feedback. A bias towards learning from positive feedback is determined by the number of times participants choose the highest-probability card (the card receiving the most positive feedback) relative to the others (A vs. B, A vs. C, A vs. D, A vs. E), and a bias towards learning from negative feedback is derived from the number of times that the lowest-probability card is avoided (B vs. C, B vs. E, B vs. F). The tendency to choose A versus avoid B is associated with several neuropsychiatric phenotypes, most notably in Parkinson's patients (Frank et al., 2004), and evidence for genetic associations has been observed (Frank et al., 2007).
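The choose-A and avoid-B measures described above can be sketched as simple proportions over probe trials. This is a minimal illustration, not the scoring code used for the CNP data: the trial layout `(left_card, right_card, chosen_card)` and the function names are assumptions, and which probe pairs enter each score is left to the caller.

```python
from typing import List, Optional, Tuple

# Assumed layout of one probe trial: (left_card, right_card, chosen_card).
ProbeTrial = Tuple[str, str, str]


def choose_rate(trials: List[ProbeTrial], target: str) -> Optional[float]:
    """Proportion of trials containing `target` on which `target` was chosen."""
    relevant = [t for t in trials if target in t[:2]]
    if not relevant:
        return None  # no trials with this card, so the rate is undefined
    return sum(t[2] == target for t in relevant) / len(relevant)


def avoid_rate(trials: List[ProbeTrial], target: str) -> Optional[float]:
    """Proportion of trials containing `target` on which the other card was chosen."""
    relevant = [t for t in trials if target in t[:2]]
    if not relevant:
        return None
    return sum(t[2] != target for t in relevant) / len(relevant)


# Made-up responses for illustration only.
example_trials = [
    ("A", "C", "A"),
    ("A", "D", "A"),
    ("E", "A", "E"),
    ("B", "C", "C"),
    ("B", "F", "B"),
]
choose_a = choose_rate(example_trials, "A")  # A chosen on 2 of 3 trials
avoid_b = avoid_rate(example_trials, "B")    # B avoided on 1 of 2 trials
```

A higher choose-A than avoid-B proportion would suggest relatively stronger learning from positive feedback, and the reverse for negative feedback.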

Probabilistic Reversal Learning Task

The PRLT includes initial learning stages (acquisition) followed by reversals of previously correct associations. Although there are several variants of the PRLT, the most commonly used involves choosing the correct image in a pair of simultaneously presented images given probabilistic feedback. Typically, one of the images is correct 80% of the time. After a learning criterion has been reached (e.g., 3 consecutive correct responses), the probabilities of receiving positive feedback reverse, such that the image that was previously correct 80% of the time is now correct only 20% of the time. The errors made after the reversal are often termed "perseverative errors". These errors measure participants' ability to adapt to contingency changes, and they are the most commonly used indices of reversal learning performance. Another common measure is failure or success in reaching learning criteria during the acquisition and reversal stages.
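The two measures described above, reaching a learning criterion and counting errors after the reversal, might be computed along the following lines. This is a sketch under stated assumptions: a response counts as "correct" when the currently higher-probability image is chosen (regardless of the feedback shown), the reversal takes effect on the trial after the criterion is met, and the function names are hypothetical.

```python
from typing import List, Optional


def trials_to_criterion(correct: List[bool], run_length: int = 3) -> Optional[int]:
    """Return the 1-based trial on which `run_length` consecutive correct
    responses are first completed, or None if the criterion is never met."""
    streak = 0
    for trial_number, is_correct in enumerate(correct, start=1):
        streak = streak + 1 if is_correct else 0
        if streak >= run_length:
            return trial_number
    return None


def errors_after_reversal(choices: List[str], reversal_trial: int,
                          previously_correct: str) -> int:
    """Count choices of the previously correct image made after
    `reversal_trial` (1-based; the reversal applies from the next trial)."""
    return sum(choice == previously_correct for choice in choices[reversal_trial:])


# Made-up choice sequence for illustration: image "X" is correct before reversal.
choices = ["X", "Y", "X", "X", "X", "X", "X", "Y", "Y"]
acquisition_correct = [choice == "X" for choice in choices]
criterion_trial = trials_to_criterion(acquisition_correct)   # 5
post_reversal_errors = errors_after_reversal(choices, criterion_trial, "X")  # 2
```

Other definitions of perseverative errors exist (for example, counting only consecutive errors immediately following the reversal); the count above follows the broader description given in this section.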

Task Procedure

For general testing procedure, please refer to LA2K General Testing Procedure [here?].

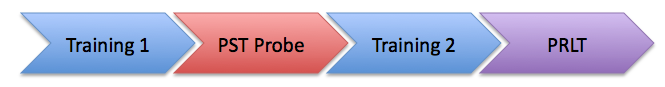

In the LA2K "RL" task, participants perform the PST followed by the PRLT along with separate training sessions prior to each (see Figure 1).

Training: Training sessions consist of probabilistic object discrimination in which participants must select which of two simultaneously presented images (abstract visual patterns) is "correct". Four pairs of images are presented in random order with the following respective feedback probabilities: 100/0, 80/20, 70/30, and 60/40. Subjects are trained to criterion on each pair (70%, 65%, 60%, and 55% correct, respectively).
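The per-pair training criteria can be expressed as a simple lookup and check. This is an illustrative sketch only: the pair labels, the use of "at or above" as the comparison, and the assumption that accuracy is the proportion of higher-probability choices over some evaluation window are not specified in the description above.

```python
from typing import Dict

# Criterion accuracy for each training pair, keyed by its feedback probabilities.
TRAINING_CRITERIA: Dict[str, float] = {
    "100/0": 0.70,
    "80/20": 0.65,
    "70/30": 0.60,
    "60/40": 0.55,
}


def met_training_criteria(accuracy: Dict[str, float]) -> bool:
    """Return True if every pair's accuracy is at or above its criterion.

    `accuracy` maps pair labels to the proportion of trials on which the
    higher-probability image was chosen; missing pairs count as 0.0.
    """
    return all(accuracy.get(pair, 0.0) >= criterion
               for pair, criterion in TRAINING_CRITERIA.items())
```

For example, accuracies of 0.90, 0.70, 0.60, and 0.55 on the four pairs would pass, whereas dropping the 60/40 pair to 0.50 would not.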

PST probe: In the PST phase (i.e., the "probe"), the pairs are recombined such that each image is presented along with every other image from training (28 pairs in all, including the original pairs). Each pair is presented once without feedback. Whether subjects learn more from positive or negative feedback is determined by how often the higher-probability item in the pair is chosen (learning from positive feedback) versus how often the lower-probability item in the pair is avoided (learning from negative feedback).
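The count of 28 probe pairs follows from taking every unordered pair of the eight training images (8 choose 2). A minimal sketch, with hypothetical single-letter labels standing in for the images:

```python
from itertools import combinations

# Hypothetical labels for the eight training images, grouped by training pair.
TRAINING_PAIRS = [("A", "B"), ("C", "D"), ("E", "F"), ("G", "H")]
IMAGES = [image for pair in TRAINING_PAIRS for image in pair]


def probe_pairs(images=IMAGES):
    """Return every unordered pair of distinct images."""
    return list(combinations(images, 2))


PROBE_PAIRS = probe_pairs()
print(len(PROBE_PAIRS))  # 28
```

The four original training pairs are among the 28, and each image appears in exactly seven pairs. Presentation order and left/right placement are not addressed here.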

PRLT: The reversal phase occurs after an additional training phase (Training 2) that is identical to the first training session. During reversal, the correct image is reversed in two of the four original pairs: the 100/0 and 70/30 pairs. Each of the four pairs is presented 10 times (40 trials total).
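The reversal-phase design can be sketched as follows. The pair labels and function names are hypothetical, and shuffling the trial order is an assumption (random presentation is stated for training but not for the reversal phase). Probabilities are kept as integer percentages to avoid floating-point rounding.

```python
import random
from typing import Dict, List, Optional

# Percent positive feedback for the originally correct image in each pair.
TRAINING_PCT: Dict[str, int] = {"100/0": 100, "80/20": 80, "70/30": 70, "60/40": 60}
REVERSED_PAIRS = {"100/0", "70/30"}


def reversal_pct() -> Dict[str, int]:
    """Percent positive feedback for the originally correct image during
    the reversal phase; reversed pairs get the complementary percentage."""
    return {pair: (100 - pct if pair in REVERSED_PAIRS else pct)
            for pair, pct in TRAINING_PCT.items()}


def build_reversal_trials(reps: int = 10, seed: Optional[int] = None) -> List[str]:
    """Return a shuffled list with each pair label repeated `reps` times."""
    rng = random.Random(seed)
    trials = [pair for pair in TRAINING_PCT for _ in range(reps)]
    rng.shuffle(trials)  # assumed: randomized presentation order
    return trials
```

With the default of 10 repetitions, `build_reversal_trials()` yields the 40 trials described above, and `reversal_pct()` gives 0, 80, 30, and 60 for the four pairs.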

Instructions: Subjects are instructed to select the correct image in a pair of images presented on the left and right sides of the screen. Left and right button presses select the corresponding image. Subjects are told that feedback will not be perfectly reliable and that sometimes their choice will be wrong even though it was correct many times in the past: "Some images are more likely to be correct than others; try to choose the one that is most likely to be correct."

Task Structure Detail

This is what we had worked on before, but could use updating. We'd like to capture a schema that can handle each of the tasks in the CNP, so please think general when editing -fws

- Stimulus Characteristics
  - sensory modality: visual
  - functional modality: spatial/categorical
  - presentation modality: computer (eprime)
- Performance Feedback Characteristics
  - sensory modality: visual
  - functional modality: verbal
  - reward (e.g., none, points, money, food): none
  - description: feedback appears as "Correct!" in green font or "Incorrect!" in red font above the image pairs.
- Response Characteristics
  - response required: yes
  - effector modality: manual
  - functional modality: keypress
  - response options: forced choice (left/right)
  - response collection: keyboard
- Assessment/Control Characteristics
  - Timing: self-paced
  - Run Time: 15 mins
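One possible way to capture the characteristics above in a general, machine-readable schema is a set of small record types. This is only a sketch to aid discussion: the class and field names are hypothetical and would need to be checked against the other CNP tasks before being adopted.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Stimulus:
    sensory_modality: str
    functional_modality: str
    presentation_modality: str


@dataclass(frozen=True)
class Feedback:
    sensory_modality: str
    functional_modality: str
    reward: str
    description: str


@dataclass(frozen=True)
class Response:
    required: bool
    effector_modality: str
    functional_modality: str
    options: str
    collection: str


@dataclass(frozen=True)
class TaskSchema:
    name: str
    stimulus: Stimulus
    feedback: Feedback
    response: Response
    timing: str
    run_time_minutes: int


# The RL task, filled in from the characteristics listed above.
CNP_RL = TaskSchema(
    name="CNP RL",
    stimulus=Stimulus("visual", "spatial/categorical", "computer (eprime)"),
    feedback=Feedback("visual", "verbal", "none",
                      '"Correct!" in green or "Incorrect!" in red above the image pairs'),
    response=Response(True, "manual", "keypress",
                      "forced choice (left/right)", "keyboard"),
    timing="self-paced",
    run_time_minutes=15,
)

# Nested dict form, convenient for export to JSON or a wiki table.
CNP_RL_DICT = asdict(CNP_RL)
```

Keeping the same four groups for every task would make the records directly comparable across the CNP battery; task-specific fields could be added as optional entries.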

Task Schematic

Schematic of the RL task to be inserted

Task Parameters Table

Stimuli

Eight abstract computer-generated images (ArtMatic Pro, U&I Software LLC, http://uisoftware.com) were used in the tasks. The following are some examples:

Performance feedback during training and PRLT appears as "Correct!" in green font or "Incorrect!" in red font above the image pairs.

Need to add font info and stim presentation timings: stim, feedback, ISI

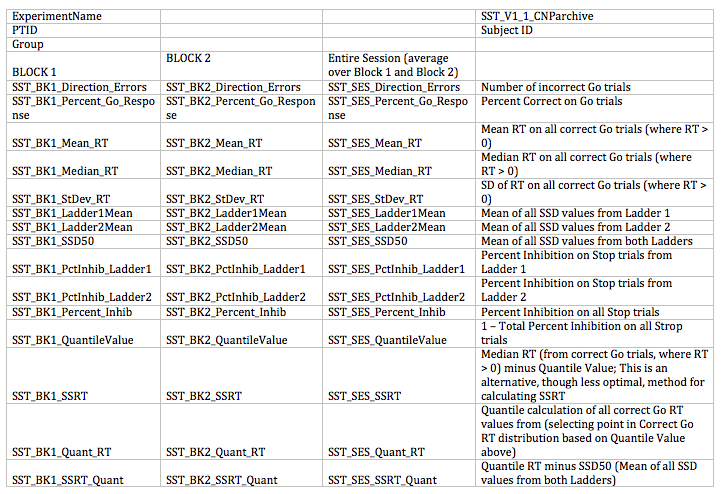

Dependent Variables

The primary dependent variable is ...

Table of all available variables.

Cleaning Rules

Code/Algorithms

History of Checking Scoring:

Data Distributions

References

Frank MJ, Seeberger LC, O'Reilly RC. By carrot or by stick: cognitive reinforcement learning in Parkinsonism. Science 2004;306:1940-1943.

Frank MJ, Moustafa AA, Haughey HM, Curran T, Hutchison KE. Genetic triple dissociation reveals multiple roles for dopamine in reinforcement learning. Proc Natl Acad Sci USA 2007;104:16311-16316.

Lawrence AD, Sahakian BJ, Rogers RD, Hodges JR, Robbins TW. Discrimination, reversal, and shift learning in Huntington's disease: mechanisms of impaired response selection. Neuropsychologia 1999;37:1359-1374.

Swainson R, Rogers RD, Sahakian BJ, Summers BA, Polkey CE, Robbins TW. Probabilistic learning and reversal deficits in patients with Parkinson's disease or frontal or temporal lobe lesions: possible adverse effects of dopaminergic medication. Neuropsychologia 2000;38:596-612.